After a particular workday, I sat down one evening and thought to myself: what would it take to get developers to actually produce highly maintainable code? Why isn’t this something that is always produced? Why have I seen so many codebases that manage complexity so poorly that they become difficult (and unpleasant) to work in? What is lacking in the environment of these development teams? Of course each developer and company environment is different, so I have tried to restrict my thoughts to those that are somewhat more common and generalizable, rather than obscure situations (like a team lacking any version control).

So here it goes.

Lack of knowledge & experience, lack of time, lack of commitment & consequence, lack of tests. These are the key factors that I have observed that drive poor maintainability in enterprise business software. I will briefly go over each one and explain how I think it contributes to poorly maintained code and what you could do about it. For reference, I use the definition found in ISO/IEC 25010 as the foundation for my definition of maintainability.

Lack of time

Addressing maintainability issues takes time. For those used to working with poorly maintainable solutions this time could be considered as ‘extra’ on top of the time needed to do your regular work. When building a new system this is the:

- time needed to review the architectural fit of the new features and re-architect where needed, instead of creating/building upon hacks

- time needed to design a solution that adheres to principles that optimize for maintainability

- time spent writing automated tests, be it upfront (Test-Driven Development) or directly after

- time spent writing documentation

Or when addressing technical debt of an existing system this is the:

- Time spent redesigning & refactoring parts of the system

- Time spent writing extra automated tests before refactoring

- Time spent performing manual tests (either by developers or dedicated testers) in cases where automation does not cover it

- Time spent writing documentation for whatever still ‘needs to be’ documented

A common complaint in the software industry is that management does not allocate enough time to work on the technical issues of the system. Or they deprioritize this work to focus on new features and bugfixes instead because that has visible impact and thus creates immediate tangible value.

If you find yourself in that position, realize it is your professional duty, as a software developer, to do your job well. That means you need time to do that job well.

So if your team uses time estimates, next time you give one for a long-lived feature, take into account the time needed to create a highly maintainable solution, rather than the time needed to create a bare minimum solution that just implements the feature and then gets manually tested on a test environment.

Note that if you or your team have been doing such a bare minimum for a while and you are now reaching a point where the pain makes you want to address it, the extra time needed to make a maintainable solution will be higher at first and will drop once you get more experienced with it.

If you are working in iterations (like agile/scrum) you’ll likely give estimates every 2 to 4 weeks. Consider starting off by adding a relatively high offset to your normal estimates, then review how accurate you were at the end of the iteration and adjust down if needed. So if you estimate a feature would take 16 hours in your normal way of working, consider adding ~40% (pick what suits you) to that figure and give an estimate of about 22 hours instead. The same could be applied when you’re using story points or t-shirt sizing, although these are already better than hour estimates because of their inherent vagueness.
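As a minimal sketch of that arithmetic (the function names are my own and purely illustrative, not part of any estimation tool), you could compute the padded estimate up front and, at the end of the iteration, check which offset would have been accurate:

```python
def padded_estimate(base_hours: float, offset: float) -> float:
    """Return the usual estimate increased by a fractional offset (0.40 means 40%)."""
    return base_hours * (1 + offset)


def observed_offset(base_hours: float, actual_hours: float) -> float:
    """Return the offset that would have matched the hours actually spent."""
    return actual_hours / base_hours - 1


# Hypothetical numbers: a 16 hour feature padded by 40%, which ended up taking 20 hours.
estimate = padded_estimate(16, 0.40)      # roughly 22 hours
next_offset = observed_offset(16, 20)     # 0.25, so 25% would have been enough
print(f"estimate: {estimate:.1f} h, offset to try next time: {next_offset:.0%}")
```

Averaging the observed offset over a few iterations, rather than reacting to a single feature, is probably the safer way to adjust it.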

If you are a software vendor, your time estimate may be part of an impact analysis for a new feature request and used as input to calculate the cost of that feature. In that case you may not have the luxury of being grossly off and adjusting down next iteration: your estimate may be translated to a dollar quote only once, and the resulting cost may be very high compared to previously paid feature requests.

Even in this case I would argue that an estimate that is too high, which lets you deliver earlier and with higher quality, is typically better for software quality than an estimate that is too low, which forces you to delay delivery. An estimate that is too low will also worsen your lack of time and may trigger practices that result in poorly maintainable code.

So if all of this ‘maintainable solutions stuff’ takes more time, aren’t poorly maintainable solutions inevitable? Yes. Every system will eventually have technical debt and pieces that are less maintainable than others. The goal is to minimize the impact of this. It helps if you can reduce the damage of these poorly maintainable areas by keeping them isolated and decoupled from most of your system.

Key takeaway: before, during and after a feature is technically implemented, software needs constant refining and refactoring to keep it highly maintainable and this should be incorporated in any planning that uses time estimates.

Lack of tests

Automated tests are one of the biggest enablers of addressing maintainability issues. That isn’t to say that software with an extensive automated test suite has no maintainability issues. But it does mean that having no automated tests, or only a very limited amount, can really hold the system back from being refactored and thus from becoming more maintainable. With a good test suite you can refactor to your heart’s content and be confident that you haven’t broken or changed anything (anything that is tested, anyway).

Some tips on addressing this:

- If you have an existing codebase with a tiny suite of automated tests, or none at all, go now and spend some time on expanding it. You may feel it is quite unstimulating to write tests for an existing system, but it is a worthwhile investment. Every minute you soldier on with those tests is going to boost your and your team’s confidence in the future. Be the hero!

- If you have a large project with very few tests and you have to start somewhere, start at the hotspots. The hotspots are the areas of the codebase that combine high complexity with frequent changes and thus have a high chance of breaking.

- Keep automated tests black-boxed as much as possible so you are not coupled to implementation details. Tests that are coupled to implementation details would in fact prevent you from refactoring, because they start failing as soon as you try to refactor anything.

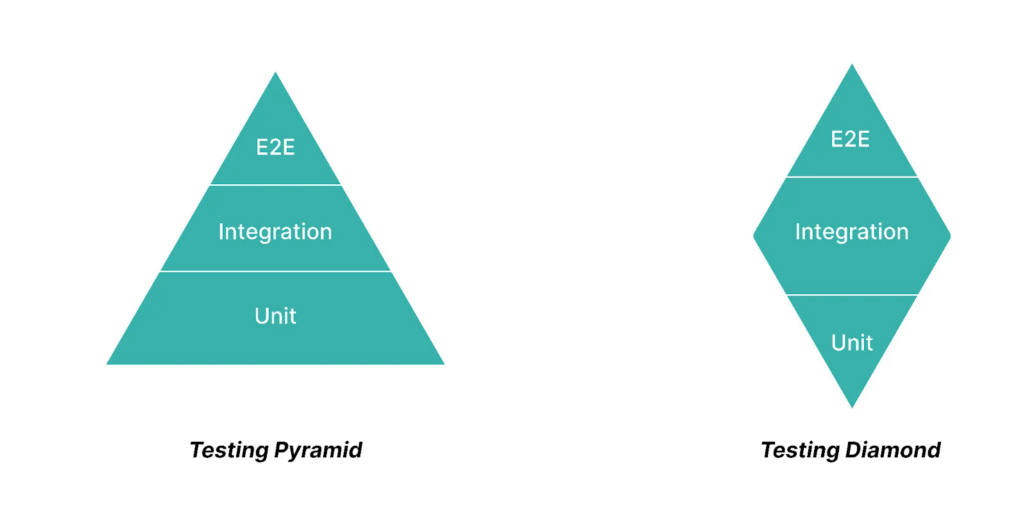

- Don’t bother with small concerns like unit testing getters and setters, as some TDD purists might show in tutorials. Write bigger tests (integration tests if you will) where you have to, if that makes more sense for your existing project.

- Start rejecting logic changes/pull requests that don’t add any automated tests. You can use static code analysis tools like SonarQube/SonarCloud in CI pipelines to enforce this.

- For legacy systems, look into characterization tests. These are tests that capture the current (known) behavior of your application, so you notice when a change alters it.

- And lastly, high code coverage does not necessarily imply an excellent test suite. You can still be missing a lot of edge cases even though your tests have ‘touched all the lines’. This is where testing experience becomes important. If you’re feeling experimental and want automated solutions for this edge case problem you could look at mutation testing.
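The hotspot tip above can be sketched in a few lines. This is only an illustration under my own assumptions: the file names and numbers are made up, "changes" could be the number of commits touching a file (for example taken from your version control history), "complexity" could be any metric you trust (even lines of code), and multiplying the two is just one simple way to combine them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    path: str
    changes: int      # assumed: commits touching the file in some recent period
    complexity: int   # assumed: any complexity metric, e.g. cyclomatic complexity


def rank_hotspots(files: list[FileStats], top: int = 3) -> list[FileStats]:
    """Return the files with the highest changes x complexity score, highest first."""
    return sorted(files, key=lambda f: f.changes * f.complexity, reverse=True)[:top]


# Hypothetical data for illustration only.
stats = [
    FileStats("billing/invoice.py", changes=42, complexity=35),
    FileStats("utils/strings.py", changes=50, complexity=4),
    FileStats("legacy/report.py", changes=3, complexity=80),
    FileStats("api/orders.py", changes=20, complexity=18),
]

for hotspot in rank_hotspots(stats, top=2):
    print(hotspot.path, hotspot.changes * hotspot.complexity)
```

A frequently changed but trivial file and a complex but untouched file both score low here, which matches the idea that the first tests should go where complexity and change meet.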

Note: I have an article in the works that describes how you can be pragmatic with automated tests: when to unit test and when to use integration tests with minimal friction. Stay tuned.
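To make the characterization test tip from the list above a bit more concrete, here is a minimal sketch. The function `legacy_shipping_cost` is a hypothetical stand-in for untested legacy code, and the expected values are assumed to have been recorded by running the current code, not derived from a specification. The test only goes through the public function, so it stays black-boxed.

```python
def legacy_shipping_cost(weight_kg: float, express: bool = False) -> float:
    """Hypothetical legacy function whose exact rules nobody remembers."""
    cost = 5.0 if weight_kg <= 2 else 5.0 + (weight_kg - 2) * 1.5
    if express:
        cost *= 2
    if cost > 50:
        cost = 50.0  # undocumented cap that the characterization test will pin down
    return cost


# Outputs observed from the current implementation, recorded as-is.
OBSERVED_BEHAVIOR = {
    (1, False): 5.0,
    (4, False): 8.0,
    (4, True): 16.0,
    (100, False): 50.0,
}


def test_shipping_cost_keeps_current_behavior() -> None:
    """Fail as soon as a refactoring changes any recorded output."""
    for (weight_kg, express), expected in OBSERVED_BEHAVIOR.items():
        assert legacy_shipping_cost(weight_kg, express) == expected
```

A test runner such as pytest would pick up the `test_` function automatically. Whether the recorded behavior is actually correct is a separate question; the point is that you find out when it changes.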

Lack of knowledge & experience

When software developers are not (frequently) exposed to concepts regarding code quality and maintainability they may not be aware of “what their codebase could’ve been”. They might not know what makes it less or more maintainable. By now they may simply be used to spending quite some extra time when modifying the system. They’ll be introducing the usual workarounds and hacks in order to create and integrate new features. And so the system grows in complexity that slowly makes it harder and harder to work with. This may be quite normal for them, just the nature of the work, and they may have grown with the system this way.

It’s also possible to be unaware of the concepts but still have a gut feeling for poorly maintainable code. This is something that experience can bring.

I am sure most programmers with a few years of experience can ‘feel’ when the code is decent and when it feels kind of iffy and dirty. Perhaps it may not be immediately apparent while you’re writing it, but it sure will be when you have to go and interact with it later. And when this cycle happens to you several times, your brain will likely start to notice a pattern.

However, I’ve observed that just because a developer can sense a possibly bad pattern, they do not always know what they should have done instead. They might just intuitively know it is not optimal, but then proceed anyway. There’s a reason why antipatterns exist.

Now, knowing how to design a good solution requires previous experience or educating yourself. And so this ties into the issues of lack of time and also a lack of commitment. One reason a bad approach gets taken is that it’s a shortcut: it saves the developer (a lot of) time and effort that would otherwise go into researching more maintainable approaches.

Managing complexity requires education

Poorly maintainable code is often highly complex. Managing complexity is a topic of its own and the world of advice/training surrounding highly maintainable code is vast. It’s good that there are a lot of resources these days. But it does not take away the fact that learning to manage complexity is going to require quite some time.

It’s not like reading a 15 minute article about SOLID principles is going to solve all of your maintainability problems (it could be a start on the topic though).

You will have to spend time and keep learning as you go. Some examples of resources that you can use to learn are books, articles, videos (conferences, individual tutorials), forums like StackOverflow/SoftwareEngineering-StackExchange, HackerNews and Reddit and of course paid training/courses. It would be great if your company organizes relevant training for the whole dev team. Internal presentations or even ‘reading clubs’ are also a good way to do knowledge transfer.

Of course, getting to work with someone who already has a lot of experience in managing complexity would be a fantastic way to learn too. One tip: don’t be too quick to judge how much you can learn from someone just by looking at their job title, seniority or professional years of experience. Even senior developers with many years of experience may not have much more experience with techniques for managing complexity than someone with 2 years of experience.

So the key takeaway is to make sure you and your team invest in gathering knowledge and experience about managing complexity and thus highly maintainable solutions and educate each other where needed.

As explained in the paragraph about lack of time, strive to get some of that extra time to do all this extra learning on the job, as you work on features.

Lack of commitment/consequence in the culture

In my opinion this one’s the most damaging. I’ve seen it happen where a software engineer has enough knowledge to design a highly maintainable solution (doing it the way ‘it should be done’), has enough time allotted and the system has automated testing. Yet they still choose to go for a poorly maintainable solution for the new feature they introduce. So why is that? Is it just a bad developer (like a bad apple)? Or is there something else at play? It could be a lack of commitment and I have observed several variations.

Lack of consequence

First of all, this could in fact be due to a lack of consequence. In situations where software quality per individual developer is not measured at all nor brought up at performance reviews, an individual developer may not be incentivized to create highly maintainable solutions. Some people even think that if they create complex software that only they can maintain, it gives them job security. This lack of consequence is present when there is no oversight or checks at all.

One way to address this, which works even if there is only a solo developer and no dev team (yet), is to introduce a simple static code analysis tool in a CI pipeline with a build breaker (a tool like SonarQube/SonarCloud and/or a linter). Static code analysis is of course not the end-all of ensuring high maintainability (the metrics are typically quite basic) but it can be a good start to address the lack of consequence. After all, a small part of the maintainability will now be measurable and can (and should) be viewed by project management and other developers. Another consequence is that developers are now forced to fix their maintainability issues if they want to deliver a successful build.
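To illustrate the build breaker idea without depending on any particular product, here is a minimal sketch using only Python’s standard `ast` module. It is not how SonarQube, SonarCloud or any linter works internally; the metric (a crude count of branch points per function), the limit and the function names are my own assumptions.

```python
import ast

# Node types counted as branch points. This is a deliberately crude metric.
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.BoolOp)


def branch_count(func: ast.AST) -> int:
    """Count branch points inside a function, including any nested functions."""
    return sum(isinstance(node, BRANCH_NODES) for node in ast.walk(func))


def find_violations(source: str, max_branches: int = 10) -> list[tuple[str, int]]:
    """Return (function name, branch count) for every function above the limit."""
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            count = branch_count(node)
            if count > max_branches:
                violations.append((node.name, count))
    return violations


def gate_exit_code(sources: dict[str, str], max_branches: int = 10) -> int:
    """Return 1 (break the build) if any source has a violation, otherwise 0."""
    failed = False
    for path, source in sources.items():
        for name, count in find_violations(source, max_branches):
            print(f"{path}: {name} has {count} branch points (limit {max_branches})")
            failed = True
    return 1 if failed else 0
```

A CI step would read the changed files, pass their contents to `gate_exit_code` and exit with the returned value, so that a non-zero result fails the build. In practice an established tool will give you far better metrics; the sketch only shows how little is needed to make a consequence exist at all.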

Lack of commitment

Second variant: this could be due to a lack of commitment in the development team’s culture.

A common guard against poor code quality of an individual developer is peer reviews by team members. But if high software quality is not inherently part of the culture of the team and they are not committed to strive for it, they may be inclined to accept a poorly maintainable solution. Especially if time is of the essence, or maybe they don’t want to create a possible interpersonal conflict.

That last one could occur especially in cases where developer-autonomy is high and peer reviews are done at a late stage (so a developer has already spent days implementing a solution).

If during peer review one concludes that the implemented solution does not adhere to the maintainability standard one would like to see, one may still be inclined to accept the solution in order to not force the developer to throw away days of their work and make them re-implement the whole solution differently.

I would say that this is a preventable problem by having peer reviews more frequently and making sure high standards regarding maintainability ‘live’ amongst the team (part of their culture).

But if you do find yourself in a situation like that and the dev team has already agreed upon following those high standards, by all means, have the developer re-implement the solution (& of course provide useful feedback). That way the developer can learn and improve for future solutions.

If re-implementing causes a delay of delivery (lack of time), be pragmatic; if you can, just adjust the deadline. Don’t think twice about it, it is for the sake of software quality, it is not something trivial to just ignore.

If delaying is not possible because delivery is mission-critical, sure, don’t delay. But have an actionable plan in place for addressing it. Accept the technical debt you’ve now incurred and log it. Make it part of the next scheduled workload/iteration. Increase the offset on future related time estimates so it incorporates fixing that technical debt.

Make sure, however, that you never accept a poor solution without proper (written) justification and a plan in place. Don’t make any exceptions to this rule. Otherwise you’d lower the standards of the system and the lack of commitment becomes more present. This is the broken windows theory at work: one tolerated shortcut signals that shortcuts are acceptable and invites more of them.

Also try educating the PO/PM (or whoever is planning work) on the value of software quality in the important parts of the system. While it may sound idealistic, the goal is to get buy-in, so that planning adjustments due to maintainability issues are not unheard of and do not cause outrage.

Lack of power

An interesting variant of lack of commitment is the feeling of a lack of power. I’ve typically observed this when technical debt on a project is high and senior developers have not been able to address it. Then a new developer comes aboard. They can sense the technical debt right away but do not feel empowered enough to address it, for various reasons: they may not have enough system & domain knowledge yet (of course a lack of tests could play a role here too), they may not want to disrupt the status quo, or they may want to fit in and follow the example of the ‘seniors’.

So how could one address this lack of power? In any dev team the seniors/tech leads fulfill an inspiring role. Ultimately it is up to them and management to commit to higher standards of maintainability. As a senior, make sure not just other seniors but the newer developers get to review your code as well. This will help cultivate the culture of striving for high maintainability across the whole team and empower developers to make decisions in favor of maintaining the maintainability standard. It is a team commitment effort after all.

And for those that feel like they have a lack of power, go talk about it with the seniors, use version control and write tests.

Conclusion

Of course all of my points above could be summarized as a lack of culture in the end. After all, if there’s already a high-maintainability development culture, it implies the knowledge and experience is there, the developers already get to spend time on improving maintainability, there are checks in place for consequences and, since it’s part of the culture, commitment is inherently high.

Maintainability is just ‘one of the attributes’ that make up the software quality attributes tree that is often shared in the software engineering community and that is part of ISO/IEC 25010 and its predecessor, ISO/IEC 9126. However, in my opinion it is one of the most important attributes, because it directly impacts your ability (agility) to address concerns for all of the other attributes.

See also:

https://www.oreilly.com/content/what-is-maintainability/

Kind regards,

Sanchez
