This article describes my journey from a dogmatic junior avoiding unit testing to a pragmatic programmer with a nuanced test strategy. Here it goes.

I have focused mostly on backend development in C#. One thing that bothered me for a long time was my attitude towards unit testing. I’ve always vaguely understood the value of unit tests but felt friction whenever I had to write them. I also never worked in an environment that had a strong unit test culture. As a result I basically never wrote them unless I had a nasty bit of complex logic that was too tedious to test manually and I wanted a faster feedback cycle. This was rare though, as most backend applications I worked on booted up fast and allowed for fast manual testing (e.g. with Postman or an accompanying UI). Also, I thought a lot of backend endpoints didn’t really have enough complex logic to warrant extensive unit testing.

As a result of writing so few unit tests I did not quite intuitively understand how to write testable code. I thought I knew. Just slam an interface on every (Service) class, avoid static classes, inject all your dependencies through the constructor, then write a few tests that use a mocking framework to mock all those dependencies, and you’re good to go do some AAA’ing [1].

Starting with unit testing

At some point while implementing a new feature I wondered: if I want to unit test this, how many tests would I even write? I knew the basics from uni: cover all the cases that could produce different behavior. That meant the happy flows and the error cases. My uni test courses focused a lot on boundary cases especially. They basically taught me the value of testing all those different cases. However, they did not explain the pragmatics of when you should test and when you shouldn’t, and they didn’t really explain how to mix different types of automated tests in your codebase either. They said there’s a test pyramid, but all the focus went to unit testing.

So I did some research on my own and found a lot of material that preached about test-driven development. I thought this meant all my logic shalt be covered in unit tests. Answer found, perhaps.

TDD is usually explained as writing tests upfront (test first). However, writing them upfront wasn’t easy for me because I already had these existing codebases, so I figured I’d write them after the fact. Not true TDD, but I thought that’d be okay anyway. Surely covering all my logic in unit tests was the only way to get to the magic 80% test coverage that I had heard about. I was told that 80% was the industry standard, and I as a good programmer boy would want to adhere to industry standards of course.

So I dabbled in unit tests with the above mindset quite a few times. I couldn’t really get myself to enjoy writing these tests though. Part of the reason was that I was a junior who mostly worked on legacy codebases that had few if any tests to begin with, and I had no mentorship. This made getting started with testing quite difficult, among many other problems that I won’t dive deeper into now. But that was not the only reason I disliked unit testing.

Disliking boilerplate test code

One thing I really disliked was all the boilerplate that went into constructing test objects. A lot of codebases I worked on had methods that depended on a few large, god-like anemic domain models with quite the hierarchy of properties. That meant for a given test you’d have to construct that class and then instantiate only some of the properties (usually a few levels deep), leaving the other properties uninstantiated because it’s not practical to fully instantiate an instance every time.

I manually constructed these objects in each test because I was told in my uni courses how important it is that a test is self-explanatory and focused when you look at it. So I thought writing reusable code for instantiating that object fully would make the tests “unfocused” on the core properties that had relevancy for that specific test. After all, if the object you’re arranging to be passed as a parameter to the SUT method is a god class with 30 filled properties, how can you easily tell which of the 30 properties is relevant for this test?

I figured the answer was to instantiate only part of the object that is relevant for the SUT method.

Another part of the boilerplate I hated was all the mocking setups. I think we’ve all been there so I won’t dive deeper into that.

Unit tests don’t feel realistic

Another reason I disliked unit testing was that I thought it was non-exhaustive anyway. So much more could go wrong in production that unit tests were never going to prevent. For example, many C# codebases use the ORM Entity Framework, which can throw entity-tracking exceptions when you call _db.SaveChanges(). You wouldn’t catch these with a unit test if EF was mocked out. Another example is that the email service could throw an exception. Or file IO operations could fail due to disk space, access rights or simply missing directories/files. But all that stuff, I was taught to just mock out.

As a result, unit testing as a whole felt bloated, artificial and kind of tightly coupled.

I didn’t feel like my unit tests were a ‘realistic’ safety net and felt like they were not really helping me other than increasing the coverage metric. So I did some more research. I had already learned about the test pyramid during uni and it doesn’t take much research to find out what you’re ‘supposed to do’ to achieve more realistic tests: integration testing.

Starting with integration testing

This integration testing stuff was confusing though. I liked the idea of more realistic tests but I was taught that you shouldn’t use integration tests to exhaustively test features. ‘Write fewer integration tests than unit tests’, ‘mind the test pyramid’, explained my teachers. Now I felt a bit uncertain.

When do I unit test something and when do I integration test something? Wouldn’t my integration tests make my unit tests redundant because what is in the integration test is also partially tested in the unit test?

I didn’t want to write unit tests that overlap with my integration tests as that felt redundant and I already didn’t really like testing because it didn’t give me the good dopamine juice that finishing a feature and seeing it work after a manual test did.

Analysis paralysis aside, I began. Writing integration tests for the first time I came across an issue. While the boilerplate was relatively small compared to unit testing (I was using ASP.NET Core TestHost), these integration tests were kind of slow to run.

I had tried to make my integration tests as realistic as possible and I invested in full isolation between integration tests. I ran them against the same DBMS as prod (SQL Server) instead of running them in-memory. Each test cleared most of the SQL Server DB and then reseeded it. As a result each test took about 1.5 seconds.

But hey, I did value these tests a lot more than my unit tests. And while researching I learned about the test diamond which meant other people valued integration testing over unit testing as well.

As a result I felt like integration tests were the answer and used them for exhaustive testing of complicated features and I took the stance that unit tests should only be used in exceptionally rare situations.

I still couldn’t really shake the feeling however that automated testing felt like a waste of time…

Why did I still feel like automated testing in general was a waste of time?

Well usually I went and manually tested my features during development and when I was done I knew I tested all possible paths (most features allowed this).

So imagine this: I work hard on the feature, I manually test it exhaustively and find it’s all working, which means finally I’m done. If I now go write tests for it, that’s not really going to give me any dopamine happy juice, because why would it? I already know it works (right now). I’d just be putting in more minutes of my day to assert what I already know: the feature is working. Instead, I could be spending my time doing other things that do provide dopamine.

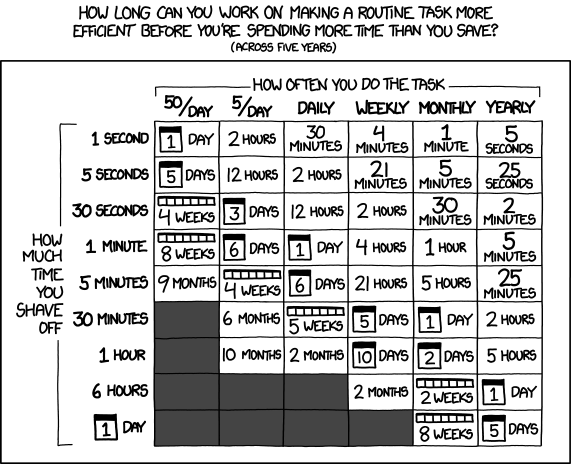

“But what about when you change that piece of software? Do you go through all possible paths again?” Well, that depended on how long it’d take me to go through all the possible paths and how often I’d need to change that code. If I expected a change to that code to be rare (once every 6 months or less) and it took me 1 or 2 minutes to go through all the possible paths, I’d likely just do that and skip writing tests altogether. Otherwise yes, I would likely have opted to automate the test process.

So to me it was frequently a cost-benefit analysis of whether it’s worth the time-friction investment of writing the test. A bit like the xkcd below.

Broken windows theory

A more subtle reason why I felt like automated testing was a waste of time was the poor code hygiene of the environment I found myself working in. This is the broken windows theory at play. I was discouraged from writing tests because I felt like the code quality was already very poor to begin with.

In a particular case I had manually tested for more than an hour to assert various cases and I didn’t write any tests for that test process. I didn’t add any unit tests because the feature I added was woven into legacy code and I didn’t see an easy way to refactor that code to make it testable. I didn’t add any integration or E2E tests because I was fed up with that codebase and feature.

In hindsight, adding tests anyway would’ve been the professional, right thing to do, but I neither knew that nor felt that way at the time.

But writing tests is a professional responsibility

“But how could you not write tests? Tests are documentation for you and (future) maintainers of the codebase” and “they’ll greatly assist in refactoring, because they give you quick feedback when you broke something”. And “TDD drives good codebase design because it allows you to detect design smells early”.

These are arguments I’ve heard in favor of automated testing that I quite like. In fact, these are the arguments that have prevented me from giving up on automated testing and forced me to find a way to change my mindset so I would be more inclined to write tests. These arguments made me want to think that writing tests should be a professional responsibility, just like a doctor washing their hands before dealing with a patient.

Integration tests do not drive better code design

I noticed that one benefit that testing would bring according to TDD preachers was not present in my integration-tested codebases. The supposed benefit is that testing drives the creation of code that is highly testable and exhibits favourable properties like loose coupling, modularity, encapsulation and proper abstraction.

But when you’re just integration-testing a backend API you get none of that as part of the testing. An integration test for a simple stateless JSON API could be just a JSON string that you, as a test client, POST to a specific API endpoint, after which you assert the response and/or the state of the database. Input, processing, output. That processing can be very messy code, and these tests neither care about that nor influence it.

That makes them ideal for easy testing of legacy codebases [2]. But that is also their weakness. If all you do is integration test features, your test suite gets slower and slower as time goes on and you end up with the same shitty code design as when you started out. Refactoring will be easier though.

And then it clicked…

For a long time I just accepted that as the way it is.

It took me quite some time until I found something that just clicked in my head once I read it. That was the functional core, imperative shell pattern. For me this pattern best explained what a bunch of concentric architectural patterns like Clean Architecture or Hexagonal Architecture also tried to present as valuable but never quite managed to hit home: the separation of pure versus impure code.

Impure code is everything that has side effects, holds state or is nondeterministic. Think of file IO, networking and database calls, but also nondeterministic factors like a DateTime clock or random number generators.

Pure code is code that just takes an input and, given that input, always returns the same output. In theory there can be 10+ C# classes involved in going from input to output, but that doesn’t matter as long as it’s pure.
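To make the distinction concrete, here is a minimal sketch. The class and method names are made up for illustration and don’t come from any real codebase. The first method is impure because it reads the system clock; the second does the same comparison but receives the current time as an argument, which makes it pure.

```csharp
using System;

// Hypothetical example, names invented for illustration only.
public static class LateFees
{
    // Impure: reads the system clock, so the same dueDate can give
    // a different answer depending on when you call it.
    public static bool IsOverdueImpure(DateTime dueDate)
        => DateTime.UtcNow > dueDate;

    // Pure: the current time is passed in, so the result depends
    // only on the two arguments.
    public static bool IsOverdue(DateTime dueDate, DateTime now)
        => now > dueDate;
}
```

The pure version can be unit tested with plain values and no mocking, because there is nothing left to mock.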

I thought this was beautifully pragmatic when I read it. Like a perfect cure for all my pains. The imperative shell would contain the “standard” side-effect heavy code, like reading and writing IO, and the functional core would be invoked from the imperative shell.

Mapping FC-IS to Unit & Integration testing

Relating that to my earlier unit vs integration test journey, I came up with a conceptual mapping. The imperative shell consists only of the stuff that you would typically mock out in unit tests anyway. It is therefore not worth unit testing and should be integration tested instead. The functional core is a prime candidate for unit tests, as pure functions are the easiest things to unit test.

This gives me the best of both worlds. I can now design my code around a functional core, which gives me an incentive to improve the shitty legacy code so that it becomes testable, and I can easily have fast, exhaustive tests on that functional core. All the nasty IO and EF Core tracking stuff can be integration tested (which is the only way to properly test this stuff anyway) using only a few non-exhaustive integration tests.
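As a rough sketch of what that split can look like for a read-decide-act endpoint, consider the example below. Everything in it (the refund scenario, `RefundPolicy`, `IOrderStore`, the 30-day and 500 thresholds) is invented for illustration. It is one possible shape under those assumptions, not a prescribed implementation.

```csharp
using System;

// Hypothetical domain, invented for illustration only.
public enum RefundDecision { Approve, Reject, ManualReview }

public sealed record Order(decimal Total, DateTime PaidAt, bool AlreadyRefunded);

// Functional core: pure decision logic. No IO, no clock, no database.
public static class RefundPolicy
{
    public static RefundDecision Decide(Order order, DateTime now)
    {
        if (order.AlreadyRefunded) return RefundDecision.Reject;
        if (now - order.PaidAt > TimeSpan.FromDays(30)) return RefundDecision.Reject;
        return order.Total > 500m ? RefundDecision.ManualReview : RefundDecision.Approve;
    }
}

// Hypothetical persistence port used by the shell.
public interface IOrderStore
{
    Order Load(int orderId);
    void SaveDecision(int orderId, RefundDecision decision);
}

// Imperative shell: read, decide, act. Only the middle step is pure.
public sealed class RefundEndpoint
{
    private readonly IOrderStore _store;
    private readonly Func<DateTime> _clock;

    public RefundEndpoint(IOrderStore store, Func<DateTime> clock)
    {
        _store = store;
        _clock = clock;
    }

    public RefundDecision Handle(int orderId)
    {
        var order = _store.Load(orderId);                     // read (impure)
        var decision = RefundPolicy.Decide(order, _clock());  // decide (pure)
        _store.SaveDecision(orderId, decision);               // act (impure)
        return decision;
    }
}
```

With this shape, every branch of `RefundPolicy.Decide` can be covered by fast unit tests that only pass in values, while `RefundEndpoint.Handle` needs just a couple of integration tests to prove that loading and saving work against the real database.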

There is no silver bullet

Now of course, there is no silver bullet. The above pattern works great for read-decide-act endpoints, which in my case is the majority of endpoints I deal with. But for endpoints where there’s inherently a lot of back and forth in IO/Networking/DB, aka a fat imperative shell, you’ll have to test these in one of two ways: Exhaustive integration testing or exhaustive unit testing with mocks/fakes.

That tradeoff depends on how accurate you want the tests to be and how accurate you can make them using unit tests, as well as how fast you want the test suite for this endpoint to run and how much you want to drive testable design.

For example, if such an endpoint is filled with EF Core tracking (sad days) then I’d opt for integration testing because that behavior is not easily mockable. If an endpoint is just a sequence of network calls and you need to assert that they happen in the right order based on some complex (functional core) logic in between, then extract that logic into a separate pure (public static) method and do mockless unit testing on it. For the network calls, use mocked unit tests: the calls are mocked out and you assert that they are called in the right order.
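Here is a minimal sketch of that second case. It uses a hand-written recording fake rather than a mocking framework so that it stays self-contained; a mocking library could do the same job. All names (`IShippingGateway`, `CheckoutPlan`, `CheckoutHandler`, `RecordingGateway`) and the checkout scenario are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical port for the outgoing network calls.
public interface IShippingGateway
{
    void ReserveStock(string sku);
    void ChargeCustomer(decimal amount);
    void NotifyWarehouse(string sku);
}

public enum Step { ReserveStock, ChargeCustomer, NotifyWarehouse }

// Functional core: decides which calls to make and in what order.
public static class CheckoutPlan
{
    public static IReadOnlyList<Step> For(bool inStock, decimal amount)
    {
        if (!inStock) return Array.Empty<Step>();
        var steps = new List<Step> { Step.ReserveStock };
        if (amount > 0m) steps.Add(Step.ChargeCustomer);
        steps.Add(Step.NotifyWarehouse);
        return steps;
    }
}

// Imperative shell: executes the plan against the gateway.
public sealed class CheckoutHandler
{
    private readonly IShippingGateway _gateway;
    public CheckoutHandler(IShippingGateway gateway) => _gateway = gateway;

    public void Handle(string sku, bool inStock, decimal amount)
    {
        foreach (var step in CheckoutPlan.For(inStock, amount))
        {
            switch (step)
            {
                case Step.ReserveStock: _gateway.ReserveStock(sku); break;
                case Step.ChargeCustomer: _gateway.ChargeCustomer(amount); break;
                case Step.NotifyWarehouse: _gateway.NotifyWarehouse(sku); break;
            }
        }
    }
}

// Test double: records the calls in the order they were made.
public sealed class RecordingGateway : IShippingGateway
{
    public List<string> Calls { get; } = new();
    public void ReserveStock(string sku) => Calls.Add("ReserveStock");
    public void ChargeCustomer(decimal amount) => Calls.Add("ChargeCustomer");
    public void NotifyWarehouse(string sku) => Calls.Add("NotifyWarehouse");
}
```

`CheckoutPlan.For` gets the exhaustive, mockless unit tests. A test for `CheckoutHandler` passes in a `RecordingGateway` and only needs to check that `Calls` matches the expected sequence.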

The world demands nuance

So now, many years later as a tech lead, I mix 1) unit tests with mocks, 2) unit tests without mocks and 3) integration tests. I also mix OOP with functional programming (FP) concepts.

I suspect my journey is quite typical. Being a dogmatic junior trying to adhere to the rules when starting out. And later becoming pragmatic: realizing the world isn’t just black or white. It’s not perfect. There’s a lot of gray, which can be annoying and initially a bit disappointing because gray always takes more brainpower to compute than black or white. Nevertheless, I urge you to put in that little bit of extra effort to not see everything in binary black or white. Don’t worry too much about that stuff and just enjoy the ride.

Kind regards,

Sanchez

[1] Arrange, act, assert. The textbook example of how to structure your unit test methods.

[2] If you work in legacy codebases, look up characterization tests. They are similarly easy to add because you treat the code as a black box: you can’t verify that its behavior is correct, so you just assert the outputs that you already know about. They’re essentially change detectors.
